UPDATE!: I had more I wanted to type but I need to rest my squishy meatfingers as they are tired. I will make a Tech Babble #17.1 post soon! :D

Hello! I apologise for the title pun. It's been a while since I made a tech babble! Yep, it's been ages, in fact. But what better time to type a tech babble than when my new medication has just kicked in (I also just finished a bottle of Lucozade) and I'm literally right here thinking about this subject. Oh, I have #18 planned, too, as I talked to myself (as usual) pacing in my living room about GCN and transistor per TFLOP, compute density and logic use vs RDNA and why CDNA is based on GCN and how RDNA was built for games, similar in nature to my Vega, Shaders and GCN babble but with updated Sash Knowledge! Yes!

Okay, this one is about AMD's relatively new graphics architecture, RDNA2. It launched in the Radeon RX 6000 series a few months ago to a really good reception and, honestly, surprised the crap out of me with how performant and efficient it is compared to the competition of the NVIDIA GeForce RTX 30 series. I felt like, with the Radeon RX 6900XT, AMD was gunning for the top-dog for the first time in a long time and, I know it wasn't, but I giggle and smile if I think 6900XT was made as an answer to the last point in my Radeon Wishlist that AMD's official Twitter account liked and replied to back when Sash had a Twitter account (Big mistake).

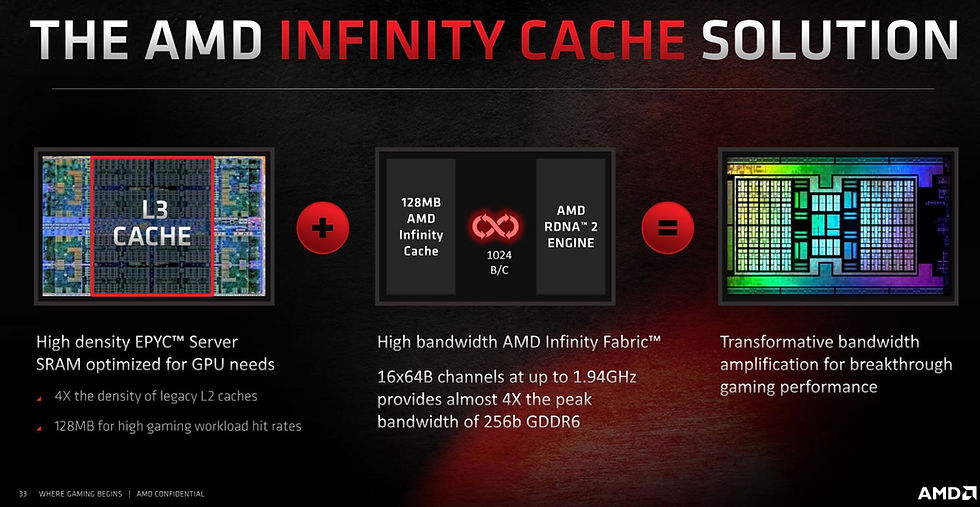

So without further ado, let's type a babble about RDNA2's biggest hardware feature, for me (it's not even the Hardware Accelerated Ray Tracing!): the L3 cache, or as AMD calls it, the 'Infinity Cache'. While N21, 22 and I believe 23 have the IFC (I will call it that or L3), this post is about Navi 21 specifically, as this is the Big Chungus Boi that socks it to Nvidia for the first time in an unrefreshingly long time.

AMD loves Caches.

This is not something GPUs are historically known for, but here we are, in 2021, with a GPU and an L3 cache. In fact, what is interesting to note is that AMD has been working towards really fast, effective on-chip caches for their processors for a long time, kicking off with Zen1, which made use of a really effective L3 cache system that is, in large part, why it does so well despite the fabric signal-routing latency innate to the Zen processor designs. (Yes, Zen1's L3 cache isn't notably bigger than competing designs at the time, but it WAS faster and an example of AMD's focus on caches coming to fruition.)

Zen2 introduced the chungus L3 cache with 16MiB per 4-core CCX block, a whopping 64MiB per AM4 package (4x 16MiB 4-core CCX across 2x CCD), and this is when AMD really took its gloves off with caches. Why am I babbling about CPU caches in a GPU L3 cache babble post? Well, because it's very important to note where AMD has focused its talent these past few years. And that is what any processor engineer will tell you is the holy grail (in a way): keeping more data on the silicon, closer to the execution cores. Even more so when you consider that AMD's chiplet/MCM approach adds routing and trace-length latency (small, but it does add up) on the way to larger off-die memory pools like DDR/GDDR. Caches help offset that.

Wait, Sash, Navi 21 isn't a chiplet or MCM, so... Don't interrupt me! I'm flowing. I'll come to that in a moment! Anyway! The deal is, AMD's design philosophy is built around caches and making them really, really big, and really, really fast. Moving back to Navi 21, one of AMD's slides from their launch material strikes me as underscoring AMD's nod to its proud design of caches from the Zen-class CPU cores.

AMD has been touting this 'Infinity' solution for its processor designs for a while. This includes the all-purpose data fabric/interconnect (the well-known Infinity Fabric) and now, this L3 cache system on RDNA2, specifically here, Navi 21; the largest implementation of that architecture in silicon.

My ideas for this babble are literally flowing faster than my pathetic organic hand-based appendages can facilitate linguistic transcription onto this computing device via physical contact with the human interface device known as a keyboard. They are also cold, which doesn't help. Oh well, back on the subject. What I wanted to say, is... (let's make a sub heading).

Chiplets and Caches?

Okay, before I go into some further details about Navi 21 specifically, and a test that I did with a friend regarding that GPU, I wanted to quickly type about how caches fit into what (from what I have heard, and I believe) is AMD's design direction with processors. That is, Multi-Chip Modules (e.g. Naples) and chiplet designs (e.g. Matisse and Vermeer on AM4). While we have yet to see a GPU implementation of MCM or chiplets that isn't on-package memory like HBM (I guess you could argue this is an MCM in a way), it is coming and I believe RDNA has been preparing for this since the start. I'll give a nod to Nemez from Twitter, as they were the one that first gave me this idea after some discussion about RDNA2, and I have given it a lot of thought and wanted to babble about it here!

Get to the point, Sash! What are you trying to say! Okay, I am trying to say that caches are important to multi-die approaches and chiplets because those designs introduce innate latency: the signal from a specific die needs to be routed to the others across the data fabric and, essentially, it needs to be 'told' where it needs to go. Similar to a router on your LAN, this process adds latency, as the routing / passing through switches eats up clock cycles. Zen's always had much higher hard-memory latency than Intel's competing processor designs, and one of the reasons is that the data fabric (the Infinity Fabric) has a lot of work to do in making sure all those functional logic blocks talk to each other correctly without needing to design a bespoke interconnect for EVERY SINGLE CHIP implementation. That is why IF is so good, but you could consider it a 'jack of all trades, master of none'. In fact, signal routing is an area where AMD is likely actively improving the fabric.

Latency: Routing vs distance?

I'll add something quickly here, and that is that actual trace length on processor packages has a minimal impact on actual latency. That is, it is understandable to think that the physical distance between, for example, Vermeer's CCD and its IOD is what adds that extra memory latency. Well, this is true to an extent, but electrical signals travel so fast through metallic conductors (a significant portion of the speed of light, as they are electromagnetic waves, after all) that the distance probably only adds a couple of nanoseconds at most. The biggest latency increaser is actually the signal being routed around the chip: from the core's L1 -> L2 -> L3 cache, from there to the control logic on the CCD, to the PHY, across the substrate, to the IOD, where there is essentially a 'traffic control switch' that sends that memory request to the appropriate areas. The larger IODs with more CCDs connected have more complex arrangements of switches and thus higher latency, as there is more routing needing to happen.
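To put a rough number on the distance part, here is a tiny Python sketch. The 2 cm trace length and the 0.5 velocity factor (signal speed as a fraction of the speed of light) are assumptions picked for illustration, not measured values for any real package. With those inputs the one-way delay comes out well under a nanosecond, which sits comfortably inside the "couple of nanoseconds" ballpark.

```python
# Rough one-way propagation delay for a signal crossing the package substrate.
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def propagation_delay_ns(distance_m: float, velocity_factor: float) -> float:
    """Nanoseconds for a signal to travel distance_m at velocity_factor * c."""
    if distance_m < 0 or not 0 < velocity_factor <= 1:
        raise ValueError("distance must be >= 0 and velocity_factor in (0, 1]")
    return distance_m / (velocity_factor * SPEED_OF_LIGHT_M_PER_S) * 1e9


# ASSUMED values: 2 cm of trace, signal moving at half the speed of light.
delay_ns = propagation_delay_ns(distance_m=0.02, velocity_factor=0.5)
print(f"one-way trace delay: {delay_ns:.3f} ns")
```

Even doubling it for a round trip keeps it tiny next to a full memory access, which is why the routing steps matter more than the distance.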

An example of this in action (routing vs distance) is the memory latency across Summit Ridge (Zen1), Pinnacle Ridge (Zen+), Matisse (Zen2) and Vermeer (Zen3). The actual, hard latency at fixed memory clock / timings improved a bit from Zen1 to Zen+, due to improvements in the memory controller and lower internal cache latencies, L2 and L3 specifically (you are seeking memory only after you've checked L1, L2 and L3 for hits). That is where you get that nice ~5ns reduction at the same settings on Ryzen 2700X vs. 1700X, for example. For the next comparison, remember that Summit and Pinnacle have on-chip memory controllers that are physically closer to the cores!

Matisse moves its memory controller to a separate piece of silicon called the I/O Die, and that is literally a good couple of centimetres from the CCD where the cores are. The distance between the cores and memory controller (trace length) has likely increased by a good amount, but hard memory latency barely increased at all. In fact, on Vermeer, it improved over Zen+, despite the latter being monolithic with an on-chip memory controller.

This is likely because Vermeer's IOD has some firmware improvements to internal signal routing over Matisse (which already improved on Zen1 in that respect). The other reason is that the fabric width is doubled on the chiplet designs, from 256b to 512b, for PCI-E 4.0 support on data transmission to and from the CPU's PCI-E root complex. Anyway, the point is, moving the memory controller off-die didn't hurt hard latency in any significant way, and that is measuring with synthetic programs that deliberately miss internal caches. More on that shortly...

Caches hide memory latency and lower bandwidth effectively. Navi 21's secret weapon (why it is NOT bandwidth limited).

An important point to make is that actual use-cases of processors (no, the AIDA64 memory latency benchmark is not a valid actual use-case) do not deliberately use data that spills out of internal caches, as that is pretty dumb (afaik). This subject is important to note on Navi 21 and the Zen2/3 CPUs because people often make a mistake when they see Radeon RX 6900XT with "only" 256-bit and 512GB/s (theoretical) to its GDDR6 memory and say it's "Bandwidth limited!" That is where they are wrong, and that is what I really wanted to get at with this babble, which also draws a comparison to the Zen2 and 3 designs.

Now, how to explain this?

I think a diagram might be useful, so I will make one in a moment (or not, we will see). Essentially, when you say "Memory Bandwidth", you are making a very broad statement and, more often than not, people use that term to refer to the main memory of the processor, such as the DDR4 on AM4 and the GDDR6 on Radeon 6000. That's fair enough, but that is also not how these processors work, and as such, "Memory bandwidth" from that perspective gives us a little bit of insight but it's almost arbitrary when you take the processor's entire memory system into account.

That's right, that includes caches.

The processor doesn't care about what raw bandwidth it has specifically to main memory; it cares about the effective bandwidth of the entire memory subsystem, across a variety of workloads, from the internal registers, through L1, 2 and 3 (the latter is the secret sauce of RDNA2), and then into the main memory. To explain why it is wrong to say that 512GB/s on 6900XT vs 936GB/s on 3090 means the 6900XT has to be memory limited, you have to think of the processor memory like this:

Instead of using raw, arbitrary peak bandwidth numbers as the basis for your comparison, take a look at a fixed length of time in a normal workload on this GPU, and the requests to and from memory during that fixed time, including internal caches and their effect on the available bandwidth of main memory.

To make that simple, in my understanding, let's say 1 second of rendering some frames for your video game. In that 1 second, you have drawn 60 frames, for example. Okay, so let's break this down. (I am not going to count the register / L0 caches for Navi and the L1 caches for Ampere, so let's start with L2, though it is important to note that RDNA has an extra cache layer below the L2, known as L1; L1 on Nvidia is comparable to L0 on RDNA, afaik.)

Navi 21 (6900XT) memory accesses:

up to 4MiB L2 - >2TB/s

up to 128MiB L3 - >1TB/s

up to 16GiB Memory - 512GB/s (theoretical)

GA102 (3090) memory accesses:

up to 6MiB L2 - >2TB/s

up to 24GiB Memory - 936GB/s (theoretical)
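The two theoretical main-memory figures in these lists are just bus width multiplied by per-pin data rate. A minimal Python sketch of that arithmetic, using the 256-bit at 16Gbps and 384-bit at 19.5Gbps configurations quoted for these cards (the function name is made up for illustration):

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s: pins * Gbit/s per pin / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


navi21 = peak_bandwidth_gb_s(bus_width_bits=256, data_rate_gbps=16.0)
ga102 = peak_bandwidth_gb_s(bus_width_bits=384, data_rate_gbps=19.5)

print(f"Navi 21 (6900XT): {navi21:.0f} GB/s")
print(f"GA102 (3090):     {ga102:.0f} GB/s")
print(f"Navi 21 / GA102:  {navi21 / ga102:.2f}")
```

These are peak numbers to main memory only, and say nothing yet about what the caches soak up before a request ever gets that far.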

Okay, with that in mind, if you look at just the memory bandwidth, you might think, oh, Navi 21 is at a huge deficit, right? Well, yeah, but you're literally cutting off an extra layer of the memory subsystem that the GPU includes in the total effective bandwidth, and that is not a fair comparison. (To the person who I love, who will probably read this; if you are NOT that person, please ignore this, as it will make no sense:)

"The Cache hides the weakness of the core!" :D

Moving on, so, the effective bandwidth, as I said, cannot be measured by arbitrary theoretical memory numbers in a single "instant", but is better measured by the effective bandwidth from all cache layers + memory over a fixed amount of time, like a second. In this case, we can break it down further to assess how Navi 21 actually has more effective bandwidth on tap than GA102 despite just over half the raw theoretical main memory bandwidth.

Okay, let's do this. For a quick disclaimer, I'm going to say that I'm going to make up these numbers for example's sake, and this is just an example of a concept, don't take these numbers as hard facts of graphics workloads! Thanks!

AMD's data says that Navi 21's hit rate in the 128MiB L3 cache is 58% (on 6800XT, which has fewer WGPs, it would be a bit higher). That is a pretty big deal. Where's that slide?

There it is, average hit rate for 4K titles is 58% on Infinity Cache access. Why is that a big deal? Well, what I am going to explain is actually staring you in the face right there from AMD's own slide. You see the "effective bandwidth" is actually made up of G6 + Cache? Well, that's exactly what I wanted to re-iterate here with my silly example.
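Here is one simple way to turn a hit rate into an "effective bandwidth" number, as a hedged Python sketch. This is a back-of-envelope model and NOT AMD's own formula: it assumes every request that reaches the L3 either hits (served by the cache) or misses (served by GDDR6), and that whichever pool saturates first sets the limit. The 1000GB/s cache figure is an assumed stand-in for the ">1TB/s" quoted earlier.

```python
def effective_bandwidth_gb_s(dram_gb_s: float, cache_gb_s: float, hit_rate: float) -> float:
    """Max request rate the cache + DRAM pair can sustain under a simple hit/miss split.

    DRAM only sees the misses, so it caps the total at dram / (1 - hit_rate).
    The cache only sees the hits, so it caps the total at cache / hit_rate.
    """
    if not 0.0 <= hit_rate < 1.0:
        raise ValueError("hit_rate must be in [0, 1)")
    dram_limit = dram_gb_s / (1.0 - hit_rate)
    cache_limit = cache_gb_s / hit_rate if hit_rate > 0 else float("inf")
    return min(dram_limit, cache_limit)


# 512 GB/s GDDR6 and the 58% hit rate come from the post; 1000 GB/s is ASSUMED.
with_l3 = effective_bandwidth_gb_s(dram_gb_s=512, cache_gb_s=1000, hit_rate=0.58)
without_l3 = effective_bandwidth_gb_s(dram_gb_s=512, cache_gb_s=1000, hit_rate=0.0)
print(f"with L3:    ~{with_l3:.0f} GB/s effective")
print(f"without L3: ~{without_l3:.0f} GB/s effective")
```

Under these assumptions the GDDR6 is still the limiting pool, but each byte of it goes a lot further because it only has to serve the misses.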

So here it is:

Say in this 1 second of rendering graphics frames, you have "10000" of traffic (remember I said it's just an example number, don't judge me) needing to be shifted to and from the main memory. Starting from the L2 cache, some of this traffic is going to be absorbed: in that 1 second, the really small data accesses will be caught by the L2 and essentially be deducted from the total bandwidth requirement for that 1 second of work. For this to work really, we need to know the L2's hit rate, but I am going to go on a ballpark guess and say it's probably like a 70-80% hit rate (THIS IS A GUESS, DO NOT TAKE IT AS FACT). If AMD's numbers are anything to go by, at 58% for 128MiB at 4K, a pretty damn large percentage of those memory requests are quite small (<128MB).

So, because this will get really, really complex really quick and I can't math to save my life, I'm going to simplify this and say GA102 has a major advantage at the L2 level (+50% capacity), but it also has WAY more resources, so the ratio of resources to cache is probably closer to being even. So, saying they are similar at the L2 level, we can subtract, say, "6000" from the total bandwidth needed in that 1 second of work (remember?) as it has been eaten by the super fast L2 cache...

Sash note: As I mentioned earlier, RDNA1 and 2 have actually got another cache layer below L2 that Turing/Ampere do not have. AMD knows this as "L1", and the WGP/CU caches are pushed down to an "L0" layer. This actually means that internally (from AMD's slides) Navi 21 has a further 1MB of cache offsetting this traffic to L2. So even though GA102 has more L2 cache (+50%), due to having more resources requesting access and lacking the L0->L1 layer (AFAIK), the L2 bandwidth pressure/load might be similar, or actually LOWER, on Navi 21. Food for thought on AMD's INSANE CACHES!

But I'll be even at L2 for ARGUMENT'S SAKE!

Navi 21:

"10000" traffic - "6000" (L2 cache) = "4000" traffic.

GA102:

"10000" traffic - "6000" (L2 cache) = "4000" traffic.

Okay, so our L2 has eaten some 6000 of that traffic, so from the 10000 total traffic in that 1 second we now have 4000 left to satisfy. (I re-iterate: this is not bandwidth, or a representation of cache hit rates. As I said, L2 is likely 70%+ (guess); the number is mainly used as a representation of how caches lower the load on the memory layers above them, meaning that they can do more things with the bandwidth they already have. More bandwidth per bandwidth! Bandwidth-ception!)

Right, this is where it gets interesting. Sure, if Navi 21 didn't have an L3 cache layer, it would be at a huge disadvantage in traffic handling in that 1 second of work, because with 4000 going in, you have literally near half the bandwidth to handle that work to main memory (256b 16Gbps vs 384b 19.5Gbps). BUT! (Remember, my numbers are examples for the concept of memory load absorption only! They do NOT represent real data figures, though I tried to reflect the % hit rates a tiny bit :3)

Navi 21:

"10000" traffic - "6000" (L2 cache) = "4000" traffic.

"4000" traffic - "2500" (L3 cache) = "1500 traffic"

Main memory is dealing with "1500" traffic at 500 traffic per tick

GA102:

"10000" traffic - "6000" (L2 cache) = "4000" traffic.

Main memory is dealing with "4000" traffic at 900 traffic per tick
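To make the "traffic" bookkeeping above concrete, here is the same made-up example as a few lines of Python. The numbers are the example figures from the lists above (still not real measurements), and "tick" is just the made-up unit of time used there; the function name is invented for this sketch.

```python
def drain_main_memory(total_traffic, absorbed_by_caches, traffic_per_tick):
    """Return (traffic left for main memory, ticks main memory needs to clear it)."""
    remaining = total_traffic
    for absorbed in absorbed_by_caches:
        remaining -= absorbed  # each cache layer eats its share first
    return remaining, remaining / traffic_per_tick


# Example numbers only: L2 eats 6000 on both, Navi 21's L3 eats a further 2500.
navi21_left, navi21_ticks = drain_main_memory(10000, [6000, 2500], traffic_per_tick=500)
ga102_left, ga102_ticks = drain_main_memory(10000, [6000], traffic_per_tick=900)

print(f"Navi 21: {navi21_left} left, {navi21_ticks:.2f} ticks")
print(f"GA102:   {ga102_left} left, {ga102_ticks:.2f} ticks")
```

With these example figures, Navi 21's main memory clears its leftover traffic in fewer ticks than GA102's, despite moving a lot less per tick.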

Oh, what's this? Yes, the traffic per tick is similar in ratio to the raw bandwidth difference on main memory between these processors, since it's relevant. This is why it's important to remember that what happens over a period of time is more important than an instant straight to main memory. Above, you can see that even though Navi 21 has a lot lower "traffic per tick" to eat that last bit of traffic, it has much less overall traffic to deal with, as that L3 cache has absorbed a HUGE chunk of it!

This, in effect, means that even though we are looking at a main memory deficit of 512GB/s vs 936GB/s, Navi 21's memory doesn't have to handle those super-fast traffic-hogging requests up to 128MB in size, because the Infinity Cache absorbed them. So overall, it has more spare bandwidth to throw at the stuff that really needs to go into the main memory. While GA102 is beefier on raw bandwidth to memory, its memory is being ganked by those requests that Navi 21's doesn't need to serve, meaning that all that raw bandwidth advantage is eaten away and it actually ends up taking longer to clear its traffic despite a lot more raw bandwidth.

And this is why Navi 21 has more effective bandwidth. And it's not even like 16Gbps over 256-bit is low, anyway. Long story short, if you take the entire memory subsystem into account, including caches, Navi 21's composite bandwidth across the entire spectrum of access requests from tiny <1MB ones to huge (example) 200MB+ ones is a lot higher because it has a lot more internal bandwidth to absorb that before it hits the lower external bandwidth, freeing up more of it for stuff that is truly too big to fit in the internal caches.

Here's an obligatory meme for your enjoyment.

I'm going to stop now because my mum's home and I want to hug her. Toodles!

Thanks for reading! Meow! ^-^"
